Gigabyte : The PCI-E 4.0 Bus Revolution

Bandwidth from 32 GB/s to 64 GB/s

With PCI-E 4.0, bus bandwidth has doubled from 32 GB/s to 64 GB/s.

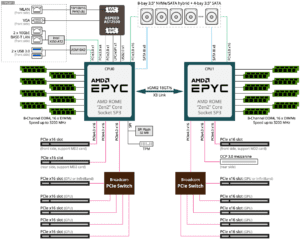

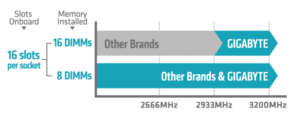
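As a rough check on the 32 GB/s and 64 GB/s figures, the sketch below computes PCIe link bandwidth from the per-lane transfer rate. It assumes an x16 slot, 128b/130b line encoding, and that the quoted figures count both directions of the link; the function name is only illustrative.

```python
# Rough PCIe bandwidth estimate.
# Assumptions: x16 slot, 128b/130b encoding, protocol overhead ignored.

def pcie_gbytes_per_s(gt_per_s: float, lanes: int = 16) -> float:
    """Usable bandwidth in GB/s for ONE direction of a PCIe link."""
    payload_gbits = gt_per_s * 128 / 130  # 128 payload bits per 130 line bits
    return payload_gbits * lanes / 8      # all lanes, bits -> bytes


for name, rate in (("PCIe 3.0", 8.0), ("PCIe 4.0", 16.0)):
    one_way = pcie_gbytes_per_s(rate)
    print(f"{name} x16: {one_way:.1f} GB/s per direction, "
          f"{2 * one_way:.1f} GB/s counting both directions")
```

Under these assumptions the two generations come out at roughly 31.5 GB/s and 63 GB/s in total, which is consistent with the rounded 32 GB/s and 64 GB/s figures.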

Memory access has grown too: from 4 channels at 2666 MHz, through 6 channels at 2933 MHz, to 8 channels at 3200 MHz. Put per processor and in GT/s (gigatransfers per second), we have gone from a limit of 10.4 GT/s to 25.6 GT/s.

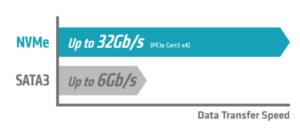
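The per-processor figures can be approximated by multiplying the number of channels by the transfer rate of each channel. The sketch below does this and also converts the result to GB/s, assuming a standard 64-bit (8-byte) DDR4 channel; that bus width is an assumption, not something stated in the text.

```python
# Aggregate memory transfer rate per processor.
# Assumption: each channel is a 64-bit (8-byte) DDR4 channel.

BUS_BYTES = 8

# (channels, transfer rate per channel in MT/s) for the three generations
GENERATIONS = [(4, 2666), (6, 2933), (8, 3200)]


def aggregate(channels: int, mt_per_s: int) -> tuple[float, float]:
    """Return (aggregate GT/s, peak GB/s) across all channels."""
    gt_per_s = channels * mt_per_s / 1000
    return gt_per_s, gt_per_s * BUS_BYTES


for channels, rate in GENERATIONS:
    gt, gb = aggregate(channels, rate)
    print(f"{channels} channels at {rate}: {gt:.1f} GT/s, about {gb:.0f} GB/s peak")
```

The last product matches the 25.6 GT/s quoted above exactly. The first comes out near 10.7 GT/s, slightly above the quoted 10.4 GT/s, so the quoted lower figure may have been rounded or derived differently.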

Of course, this had to go hand in hand with disk speed, which has also increased with SSDs. They no longer have mechanical limitations and can run at the speed of persistent memory chips (no data loss), moving beyond the SATA bus, which could only deliver 6 Gb/s. This has taken us from disks of up to 100,000 IOPS to a 32 Gb/s interface, which allows Gen 3 disks of up to one million IOPS (input/output operations per second) and up to 2 million IOPS in Gen 4.
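To relate these IOPS figures to the bus speeds, the sketch below converts IOPS into throughput. It assumes 4 KiB per operation, a common benchmark block size that is not stated in the text, and 8b/10b encoding on the SATA link.

```python
# Relating IOPS to bus throughput.
# Assumptions: 4 KiB per I/O operation; SATA uses 8b/10b line encoding.

def sata_usable_mb_s(line_rate_gbit: float = 6.0) -> float:
    """Usable SATA throughput in MB/s after 8b/10b encoding."""
    return line_rate_gbit * 1e9 * 8 / 10 / 8 / 1e6


def iops_to_gb_s(iops: int, block_bytes: int = 4096) -> float:
    """Throughput in GB/s needed to sustain the given IOPS."""
    return iops * block_bytes / 1e9


print(f"SATA 6 Gb/s usable: {sata_usable_mb_s():.0f} MB/s")
for label, iops in (("SATA-class", 100_000), ("Gen 3", 1_000_000), ("Gen 4", 2_000_000)):
    print(f"{label}: {iops:,} IOPS needs about {iops_to_gb_s(iops):.2f} GB/s")
```

With these assumptions, one million IOPS needs about 4.1 GB/s, which is in the same range as the 32 Gb/s (4 GB/s raw) link mentioned above, and two million IOPS needs roughly twice that.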

Logically, these speeds can also be boosted through RAID technologies, which group multiple disks together to obtain more performance and/or added data protection in RAID 0, 1, 10, 5 and 6. In this regard, there have been several approaches:

1st From Intel, with VROC, which lets the processor manage disk RAID in a hardware/software environment, configured from Gigabyte's own BIOS and requiring an additional license.

In principle it supports only 2 NVMe SSDs, or up to 24 disks with an expander, with the consequent load on the processor and sacrifice of control lanes. This was already available in the 2nd generation Xeon Scalable, known as Cascade Lake, and has been improved in the 3rd generation, or Ice Lake.

2nd From Broadcom, owner of the classic LSI RAID controller technology, which uses a specialized RISC processor to offload the host processor. Until now, these controllers could only handle SATA3 and SAS3 disks. The new controllers now being launched can also handle NVMe disks, up to a maximum of 4 drives connected directly, in RAID 0, 1 and 5. Logically, the new RISC processor is more powerful and can still handle SAS/SATA disks and/or NVMe disks, with certain limitations.

3rd From NVIDIA, which has already launched this technology as a new concept; the platforms will see the light in its first quarter (Feb-Apr 2022, as this company's quarters are out of phase with calendar quarters). The recent T1000 GPU has been redesigned and renamed the SupremeRAID™ SR-1000 for use as a RAID controller under a newly coined concept called GRAID SupremeRAID™. This innovative solution unlocks 100% of the performance of NVMe SSD drives, without sacrificing data security.
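Whichever controller is used, the RAID levels mentioned above trade capacity for protection in the same way. The sketch below gives the usable capacity, measured in whole disks, for an array of equal-sized disks. It is a generic illustration using the textbook rules for each level and is not tied to VROC, Broadcom or GRAID; RAID 1 is treated as a single mirrored set.

```python
# Usable capacity (in "disks' worth") for common RAID levels.
# Assumptions: equal-sized disks; RAID 1 is one mirrored set of n disks.

RULES = {
    "0": (2, lambda n: n),        # striping, no redundancy
    "1": (2, lambda n: 1),        # every disk holds the same data
    "10": (4, lambda n: n // 2),  # striped mirrored pairs
    "5": (3, lambda n: n - 1),    # one disk's worth of parity
    "6": (4, lambda n: n - 2),    # two disks' worth of parity
}


def usable_disks(level: str, n: int) -> int:
    """Return how many disks' worth of capacity remain usable."""
    minimum, formula = RULES[level]
    if n < minimum:
        raise ValueError(f"RAID {level} needs at least {minimum} disks")
    if level == "10" and n % 2:
        raise ValueError("RAID 10 needs an even number of disks")
    return formula(n)


for level in ("0", "1", "10", "5", "6"):
    print(f"RAID {level} with 8 disks: {usable_disks(level, 8)} usable")
```

For example, an 8-disk RAID 5 keeps 7 disks' worth of capacity and tolerates one disk failure, while RAID 6 keeps 6 and tolerates two.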

New GRAID solution with high performance

While traditional RAID technologies created bottlenecks on SSDs, the new GRAID solution is a hardware/software system that overcomes this limitation on U.2 (2.5″) NVMe SSDs and PCI-E 4.0 disks.

GRAID SupremeRAID works by installing a virtual NVMe controller in the operating system (available for Windows Server and the major Linux distributions) and integrating a high-performance PCIe 4.0 device that the processor can handle as a single device, without sacrificing the performance that is so important in HPC systems.

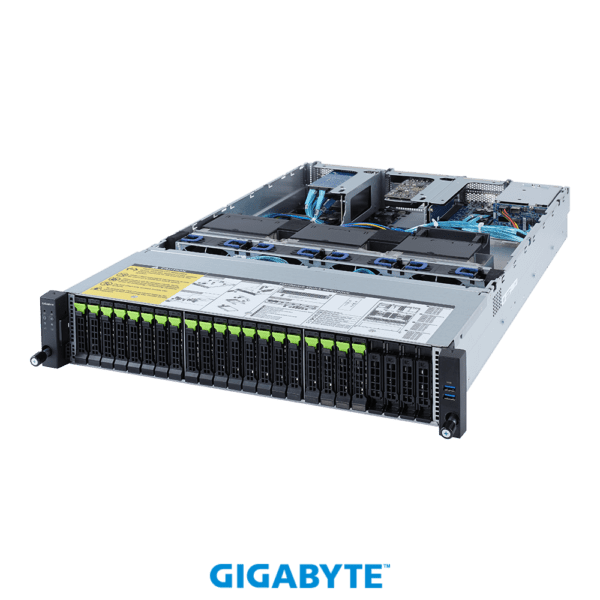

This technology is already present in the SIE Ladon All-flash 4.0, which offers up to 285 TB net, with a performance of up to 6 million IOPS and 100 Gb/s network throughput. It is based on the R282-Z9G platform, which can accommodate up to 20 NVMe U.2 PCI-E 4.0 disks and up to 128 cores with two AMD Milan processors. Its network output is based on the Mellanox MCX653105A-EFAT card, which can deliver 100 Gb/s over the PCI-E 4.0 bus, either as InfiniBand HDR at 100 Gb/s or as Ethernet at the same speed.

At this point, we have equipment with up to 8 A100 cards with 80 GB of memory each, connected over an NVLink bus at 600 GB/s, and new equipment with up to 10 A40 cards of 48 GB, both focused on deep learning (training) and artificial intelligence. Many of you will point out that Ladon SIE systems are often not stand-alone machines but are integrated within a cluster.

Indeed, this bandwidth at the internal level of the machines forces us to consider how to interconnect them.

First of all, European Computing Systems, as an NVIDIA Preferred Partner, is committed to Mellanox HDR InfiniBand technology at 100 and 200 Gb/s, which is also Ethernet compatible, so that these solutions can be integrated into classic networks.

InfiniBand uses communication packets, with headers and queues, that are much smaller than those of the Ethernet protocol, which allows processes to be parallelized between machines. It also offers latency of the same order of magnitude as that between 2 processors on the same board.

However, to run processes without bottlenecks, for example on GPUs, the internal NVLink bus of the devices has had to be extended between machines via the NVSwitch protocol. This is possible through the Broadcom PCIe switch hub, as shown in the following diagram.